Apple iPhone’s Voice-to-Text Feature Sparks Controversy Over Political Misinterpretation

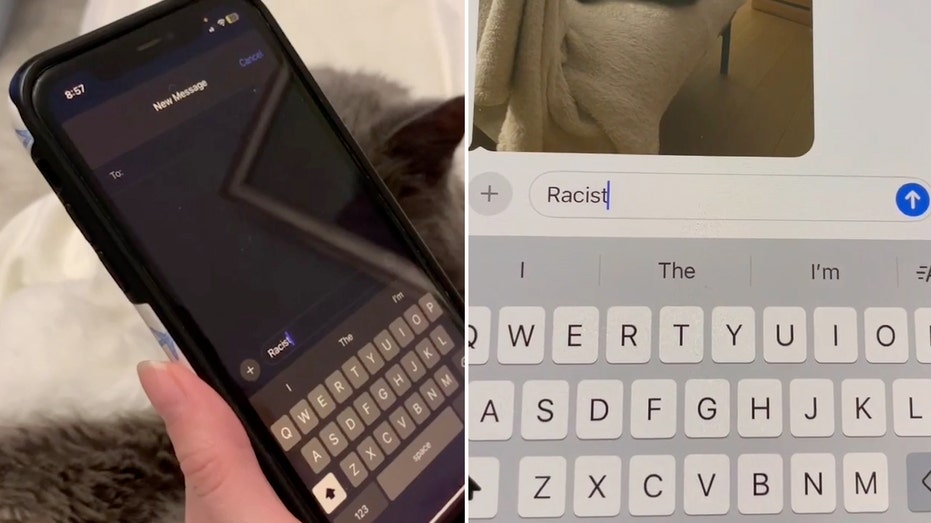

The iPhone’s voice-to-text dictation feature is drawing criticism after a user on TikTok showcased a peculiar glitch. In the viral video, when the user dictated the word “racist,” the software momentarily displayed “Trump” before correcting itself to “racist.”

Reproducing the Glitch

Fox News Digital was able to reproduce the glitch. In several trials, when a user said “racist,” the dictation feature briefly flashed “Trump” before reverting to the correct word. The behavior was not consistent, however: in some instances the software produced unrelated words such as “reinhold” and “you,” and in most cases it transcribed “racist” accurately from the start.

Apple’s Response to the Issue

An Apple spokesperson addressed the controversy, stating that the company is aware of the glitch affecting its speech recognition model. “We are rolling out a fix as soon as possible,” the spokesperson said. According to Apple, the underlying issue lies in the phonetic similarities between words, which may lead the model to suggest incorrect terms before settling on the right one. The glitch also seems to affect other words containing the “r” consonant.

Historical Context of Technology and Political Bias

This incident is not the first time technology has been accused of exhibiting bias against Donald Trump. In a separate case, Amazon’s Alexa faced backlash when it provided reasons for voting for then-Vice President Kamala Harris while refusing to offer similar responses for Trump. Following this controversy, Amazon representatives briefed the House Judiciary Committee, clarifying that Alexa employs pre-programmed manual overrides to respond to certain user inquiries.

When asked about reasons to support Trump or then-President Joe Biden, Alexa would respond, “I cannot provide content that promotes this specific political party or candidate.” The company admitted that manual overrides had not yet been programmed for Harris, as there had been limited user interest in her candidacy.

Swift Action from Amazon

After the viral video highlighting Alexa’s responses circulated, Amazon quickly acknowledged the issue and, within two hours, implemented a manual override for questions regarding Harris. Before this fix, Alexa answered a Fox News Digital inquiry with a response that highlighted Harris’s identity and her plans to tackle racial injustice. That response sparked further discussion about perceived political bias in tech.

Amazon’s Commitment to Neutrality

During the briefing with House staffers, Amazon expressed regret over the incident, emphasizing its commitment to preventing Alexa from exhibiting political bias. The tech giant stated that it failed to uphold this policy in the recent incident, prompting a comprehensive audit of its systems. As a result, Amazon has instituted manual overrides for all candidates and a variety of election-related questions, expanding beyond just presidential candidates.

As voice technology continues to evolve, its intersection with political discourse raises important questions about bias and accuracy. It remains essential for tech companies to ensure their products are neutral and reliable, particularly in politically charged contexts.