How Visual Categorization Aided a Museum Heist—and What It Reveals About AI Bias

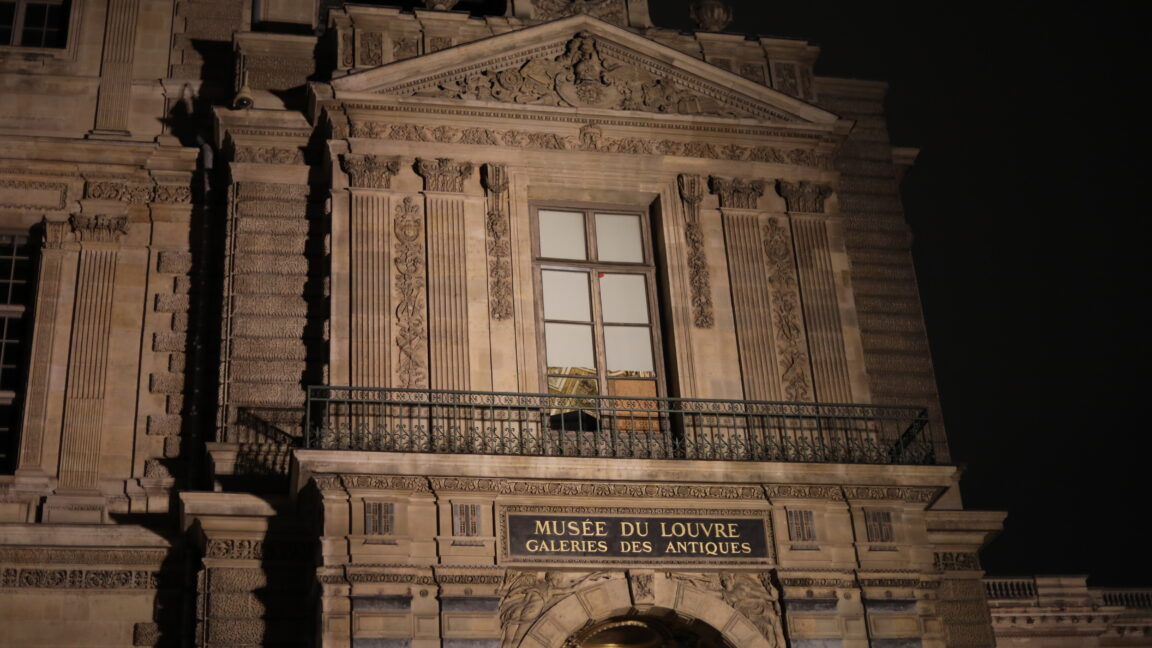

On October 19, 2025, four men executed a brazen theft at the Louvre, the world’s most-visited museum, stealing crown jewels valued at over 88 million euros in less than eight minutes. Despite extensive surveillance, the security system failed to detect their intrusion until it was too late.

The thieves disguised themselves as construction workers, arriving with a furniture lift to reach a balcony overlooking the Seine. Wearing hi-vis vests, they blended into the urban scene and exploited the social cues that both our brains and AI systems use to categorize people as “normal.”

According to sociologist Erving Goffman, people perform social roles by adopting cues that match what others expect of them. The robbers’ mastery of this “presentation of self” let them slip past security unnoticed. The incident shows how human perception and artificial intelligence alike rely heavily on categorization: quickly filtering out what is deemed “ordinary” and flagging what appears suspicious.

AI systems such as facial recognition tools learn patterns from data that reflect societal biases. They tend to over-scrutinize people who deviate from perceived “norms,” which often produces racial or gender-based disparities. Just as security guards might overlook someone dressed as a worker, AI can overlook some groups while fixating on others.

Experts emphasize that AI does not create biases on its own; it absorbs the societal patterns embedded in its training data. Flawed assumptions about what counts as normal can therefore perpetuate discrimination, leaving both human and machine perception prone to error.

The Louvre heist underscores a critical point: our perceptions—whether human or algorithmic—are shaped by social categories, which can be manipulated. Before advancing AI’s visual recognition capabilities, we must scrutinize how our own perceptions influence what is seen and what is overlooked.

In the end, the robbers succeeded because they understood the sociology of appearance, and their success highlights how conformity can mask risk. Their daylight theft was not just a feat of planning but a demonstration of how categorical thinking shapes both human judgment and machine learning.

As Vincent Charles from Queen’s University Belfast and Tatiana Gherman from the University of Northampton note, questioning our own visual assumptions is essential to developing fairer, more accurate AI systems.