Destiny Matrix Chart: How does it help uncover your destiny?

Destiny is one of the most mystical concepts, and people have been trying to read it for millennia. We look for clues and try to figure out how chance and events shape our destiny. One of the tools used to decipher these mysteries is the Destiny Matrix Chart.

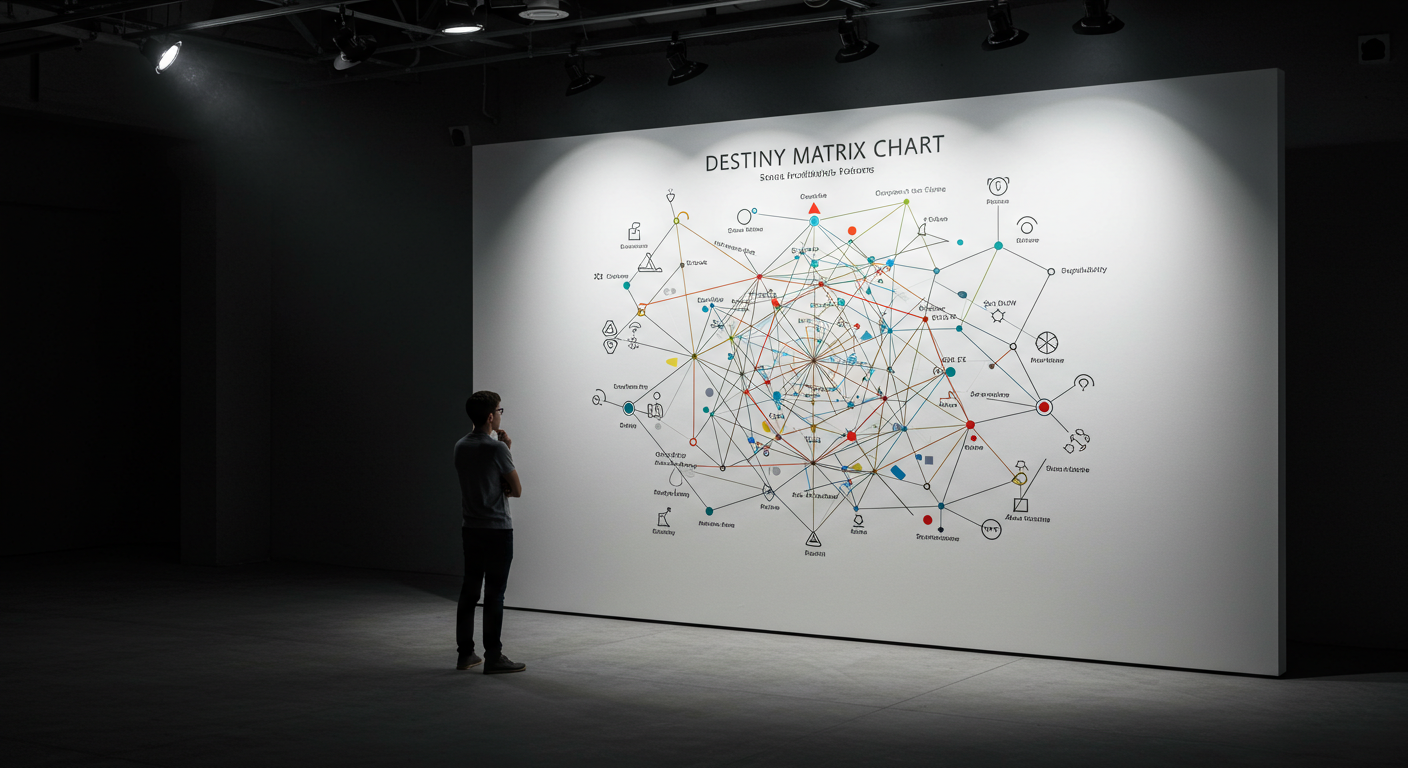

What is the Destiny Matrix Chart?

The Destiny Matrix Chart (or the Matrix of Destiny) is a tool for understanding personal life paths through numbers. The method takes a person’s date of birth and, through a series of calculations, derives the key points and possible sequences of change in that person’s destiny. It is like a map that reflects different aspects of your life, such as career, personal relationships, health, and even your unconscious talents.

The structure of the Destiny Matrix

To understand how the Destiny Matrix Chart works, you should first study how the matrix is compiled. The matrix is a grid of numerical values derived from your date of birth. The numbers in this grid represent different aspects of your life at different stages, for example professional achievements, family, friends, and important years.

The grid divides your destiny into separate sections according to their significance:

Destiny Number – the basic number calculated from your date of birth. It reflects your main life task.

Career Number – describes your professional inclinations and opportunities for success in your chosen field.

Health Number – predicts how events related to your health will develop.

Love and Relationship Number – reveals aspects of personal relationships and compatibility with partners.

Example of a matrix:

How does the Destiny Matrix Chart work?

To create your own Destiny Matrix Chart, you must enter your exact date of birth. From this date, the calculator will perform calculations and give numerical values for each of these directions. These numbers are then placed in different sectors of the matrix. Based on the values of the numbers, you can draw conclusions about the most favorable directions, what can happen, and what area you should pay more attention to.
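The article does not spell out the formula the calculator uses, so the following is only a minimal sketch of one common numerology convention: add up the digits of the birth date and keep reducing the result until it fits an upper limit. The function names, the limit of 22, and the reduction rule are all assumptions made for illustration, and the calculator on the site may work differently.

```python
from datetime import date


def digit_sum(n: int) -> int:
    """Return the sum of the decimal digits of a non-negative integer."""
    return sum(int(d) for d in str(n))


def reduce_number(n: int, limit: int = 22) -> int:
    """Sum the digits repeatedly until the value is at most `limit`.

    The limit of 22 is an assumed convention, not something stated in the article.
    """
    while n > limit:
        n = digit_sum(n)
    return n


def destiny_number(birth: date, limit: int = 22) -> int:
    """Hypothetical Destiny Number: digits of day, month and year added, then reduced."""
    total = digit_sum(birth.day) + digit_sum(birth.month) + digit_sum(birth.year)
    return reduce_number(total, limit)


if __name__ == "__main__":
    # 15 July 1990: (1+5) + 7 + (1+9+9+0) = 32, and 3 + 2 = 5
    print(destiny_number(date(1990, 7, 15)))  # prints 5
```

Under these assumptions, a birth date of 15 July 1990 reduces to 5. The other sector numbers would need their own rules, which the article does not describe.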

Skills for understanding numbers and their meaning

Each number in the matrix is interpreted in its own way. For example, if your Destiny Number is “5”, it can indicate a desire for change and the need to be flexible. If your Career Number is “2”, it can mean a predisposition to creativity or working with people. The Love and Relationship Number can reveal which kind of partner suits you best, as well as which qualities to look for in a partner.

Understanding what the numbers mean lets you use the information from the Destiny Matrix Chart correctly on the way to a more successful and harmonious life.
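Because the same number can mean different things in different sectors, an interpretation is best thought of as a lookup by sector and number together. The sketch below shows that idea with only the two meanings mentioned above; the table layout, the sector labels, and the `interpret` helper are hypothetical, and a real reading would cover far more entries.

```python
# Meanings keyed by (sector, number). Only the two examples from the text are listed.
MEANINGS = {
    ("destiny", 5): "a desire for change and the need to be flexible",
    ("career", 2): "a predisposition to creativity or working with people",
}


def interpret(sector: str, number: int) -> str:
    """Return the listed meaning for a sector/number pair, or a fallback message."""
    return MEANINGS.get((sector, number), "no interpretation listed here")


if __name__ == "__main__":
    print(interpret("destiny", 5))
    print(interpret("career", 2))
    print(interpret("health", 7))  # not covered by the examples in the text
```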

Using the Destiny Matrix Chart: Benefits

1. Predicting what is going to happen in life

Knowing what might happen in the future allows you to prepare for certain situations in advance. For example, if you know that at some point your matrix predicts possible difficulties at work, you can take steps in advance to avoid negative consequences.

2. Help with decision making

With the help of the Destiny Matrix Chart, you can figure out which option is most favorable in a given situation. It could be a career choice, a relationship with a partner, or a decision on an important life issue – the Destiny Matrix Chart can suggest the best path.

3. Developing personal strengths

The matrix can also help you better understand yourself, your talents, and your characteristics. You may feel that you cannot find your place in life, but studying your matrix can reveal hidden opportunities that you have not seen until now.

4. Increased awareness of your purpose

The Destiny Matrix gives you clarity and confidence that you did not end up on this path by accident. It gives you the opportunity to feel part of something bigger, realizing your purpose.

5. Harmonization of relationships

Special attention should be paid to how the Destiny Matrix Chart can help in relationship matters. It is a valuable tool for compatibility analysis, which can suggest which partner will be the most harmonious, and which relationships can lead to problems.

How to use the Destiny Matrix Chart?

To make your Destiny Matrix Chart, just use the calculator on the website destinynums.com. After entering your date of birth, you will receive a personalized matrix that will reflect all the main aspects of your life.

Tips for interpreting the matrix

Before drawing conclusions, look at all the numbers in the matrix. Do not stop at a single number, because the reading should take every aspect of life into account.

Each person has their own personal life path, and therefore you should not rely on a general forecast.